Walking and running in Unreal

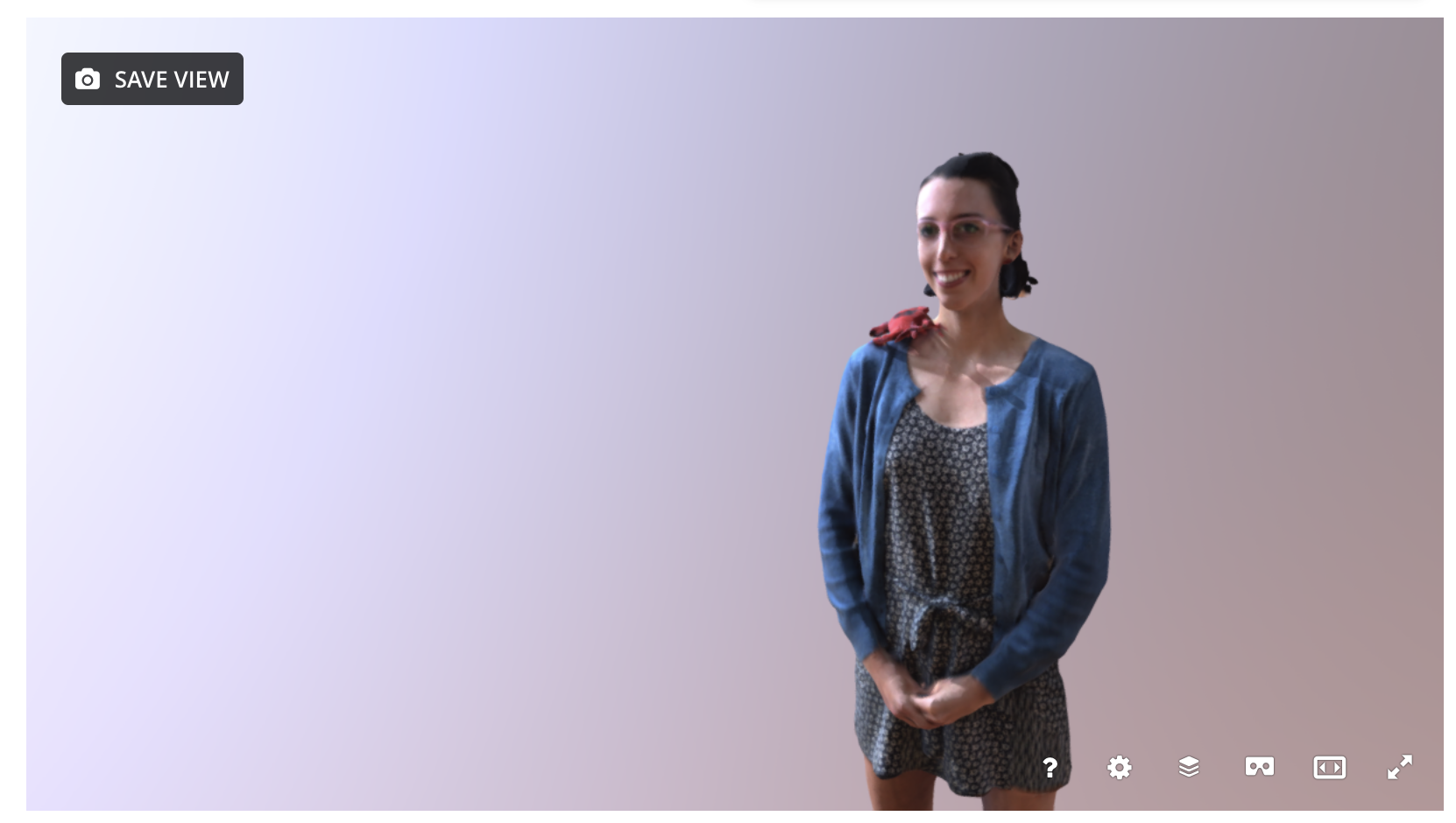

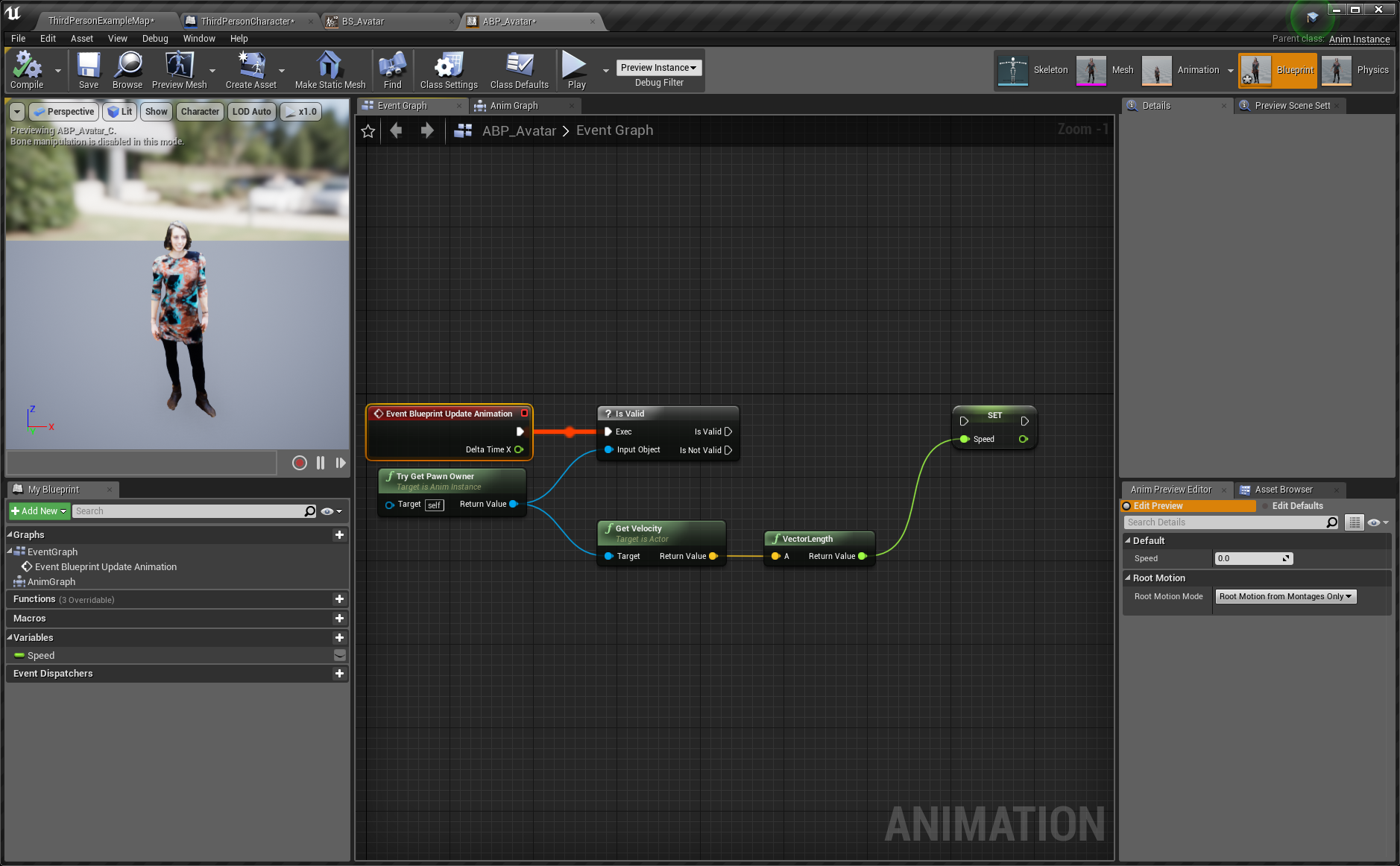

Following the tutorial, I made a third-person character that can run and that kicks when the 1 key is pressed. I also found out it can run and kick at the same time:

Then I gave the character the superpower of doing all these things while looking at their phone:

I chose this run first simply because I thought it was funny. I chose the kick because I don't get to do that very often in real life. I liked the combination because the run looks really out of control, and then the character pulls it together and delivers a crazy kick.

I merged the run with the touch-screen animation on the upper body so that I could create a game simulating everyone walking around on their phones. It's better than real life, though, because now you can run without looking up from your phone! I also liked that, in a game-engine context, the phone replaces the standard gun.

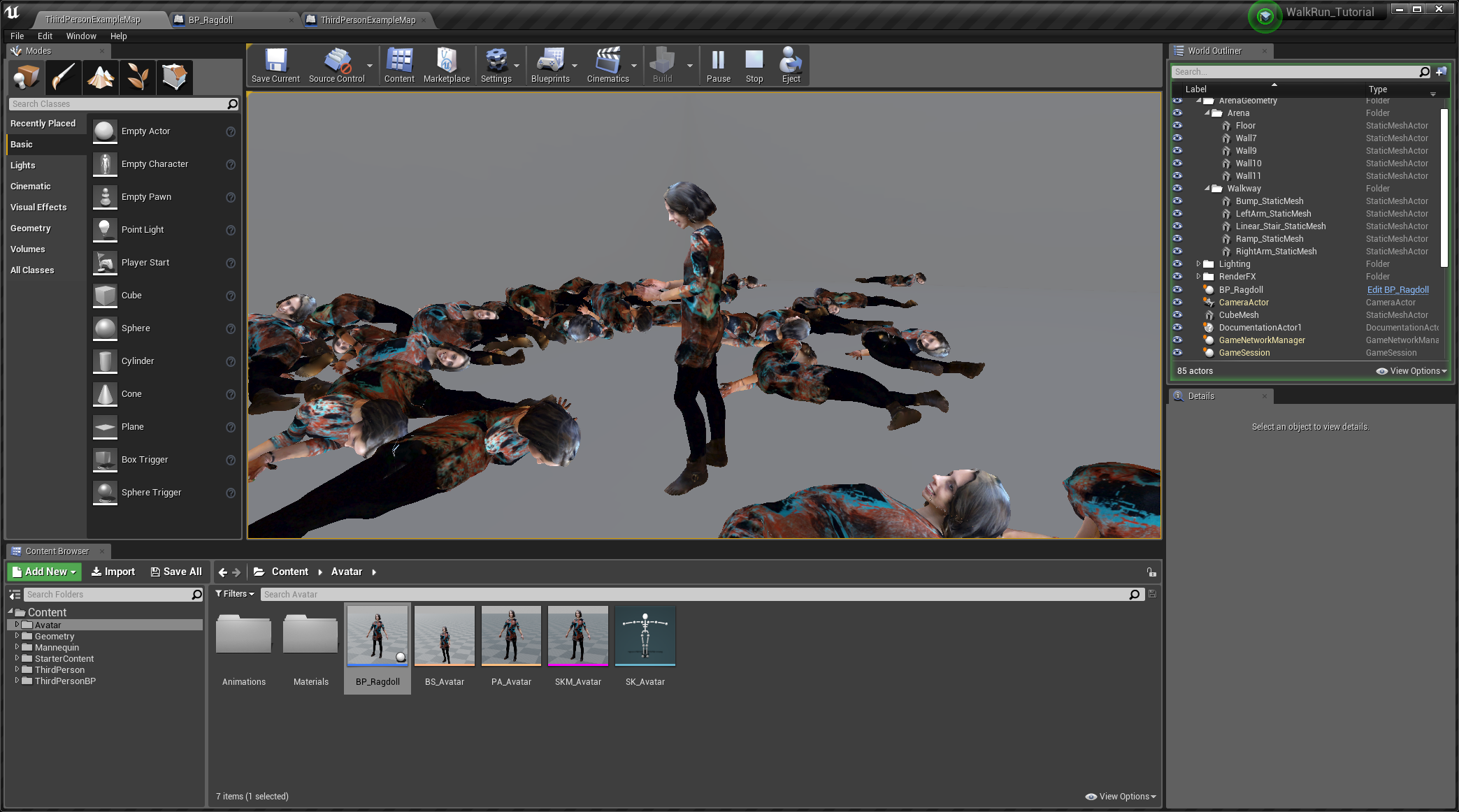

I started to build a garden (from 3D scans I took of a garden near my apartment) for the character to run through without looking at, but I ran out of time:

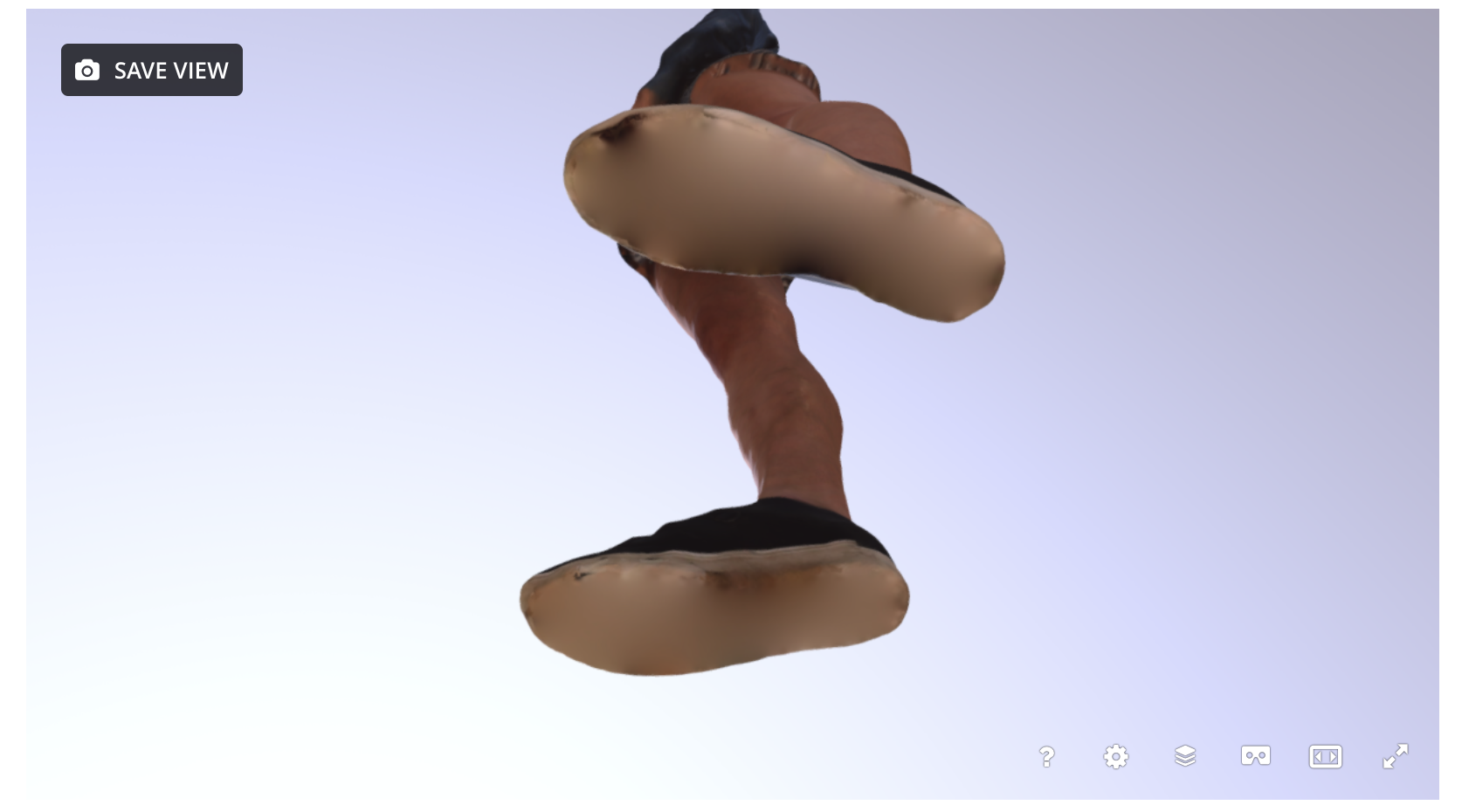

This is my favorite shot though:

Discussion Questions

Hacking The Sims so that the Sims die feels very different from what GTA modders are doing (and the article discusses it differently). Why is that? I remember a friend (probably in elementary school?) showing me how to remove the swimming pool ladder, for example, and, reflecting on it now, I'm trying to figure out how that fits into these other trends and behaviors.

Reading about Wolfson's piece and then about the GTA modders, I was wondering: if the GTA modders want more and more realistic violence, where and when do the two intersect?

![Wrap 3.4.8 screenshot, ScanMorph_Pipeline_PerformativeAvatars_19, 11/13/2019](https://images.squarespace-cdn.com/content/v1/59d70495e3df282e4e6e029f/1573746351436-I6UOGT3UWBML9I49VZ6S/Wrap+3.4.8+TRIAL+PERIOD+%282+Days+Left%29+%5BScanMorph_Pipeline_PerformativeAvatars_19_%5D+11_13_2019+11_10_46+PM.png)